What Is LLM Grounding? A Developer's Guide

LLM grounding connects language models to external data so outputs are based on facts, not training patterns. Learn the techniques, architecture, and tools.

Ask an AI coding assistant to use the useAgent hook from Vercel’s AI SDK. If the model was trained before v6 shipped, you’ll get a confident answer referencing Experimental_Agent — an API that was renamed months ago. The code looks right. The types look right. It’s wrong.

This is what happens when a language model has no connection to current reality. LLMs are powerful pattern matchers trained on internet snapshots. They have no access to your docs, your APIs, or your data. When they lack information, they fill the gap with plausible-sounding fiction. This isn’t a bug — it’s a fundamental limitation of how these models work. Researchers call it “hallucination,” but that implies randomness. In practice, it’s worse: the model generates answers that are structurally correct but factually outdated, and there’s nothing in the output that tells you which parts are real.

Grounding is the architectural solution. Instead of hoping the training data is current enough, you connect the model to real data sources at the time it generates a response. The result: answers based on facts, not patterns.

What is LLM grounding?

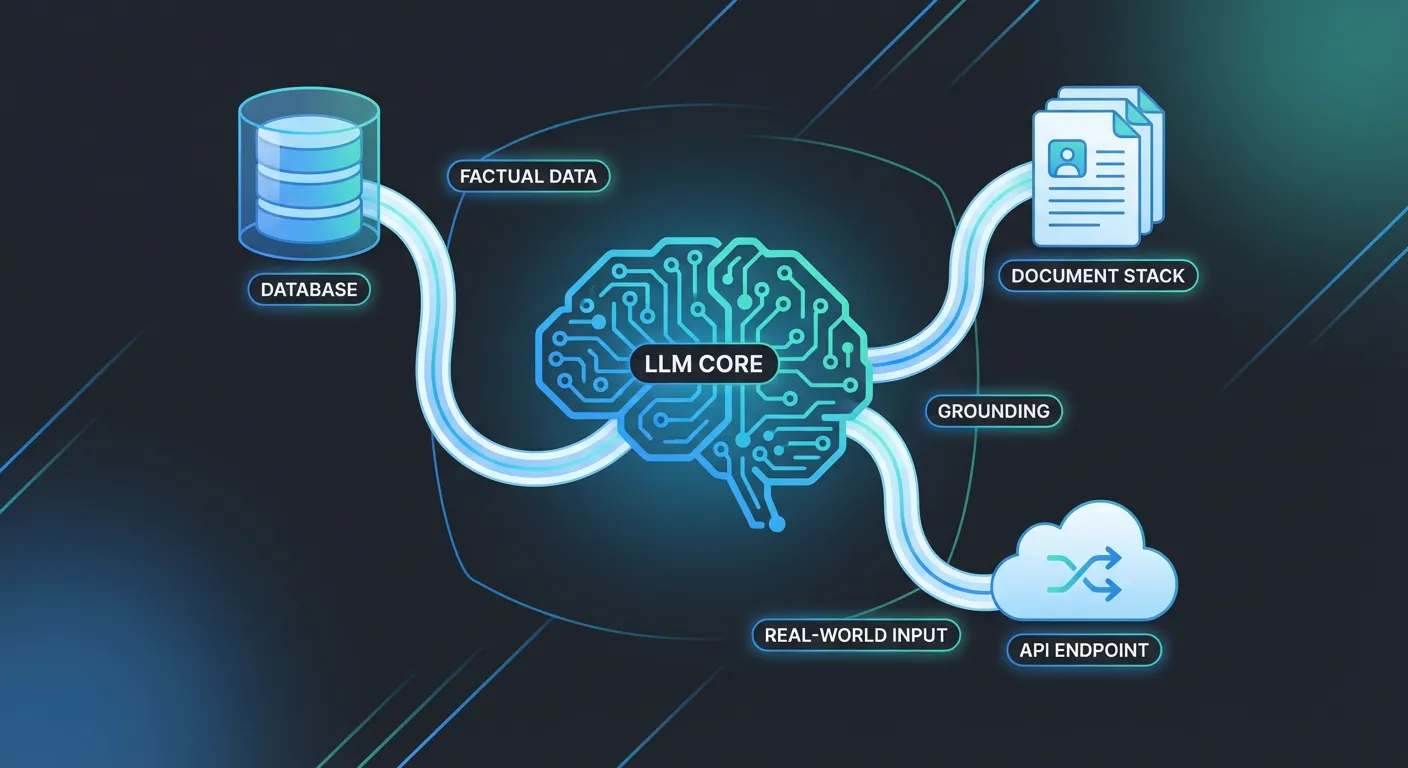

LLM grounding is the process of connecting a language model to external data sources at inference time, so it can retrieve and reason over real information instead of relying solely on its training data.

It’s not a single technique — it’s an umbrella term for a category of approaches:

- Retrieval-Augmented Generation (RAG) — fetching relevant documents before generation. The model searches a knowledge base, retrieves matching content, and uses it as context for its response.

- Tool use / function calling — letting the model query APIs, databases, or services directly. Instead of guessing a price, it calls a pricing API.

- Knowledge retrieval — structured access to specific facts through knowledge graphs, lookup tables, or semantic search indexes. Not just document chunks, but precise answers.

Grounding is the goal. RAG, tool use, and knowledge retrieval are techniques to achieve it. Most production systems combine more than one.

Types of grounding by data source

Not all grounding is the same. Different data sources have different characteristics, and the right approach depends on what kind of data your model needs.

Static documentation — library docs, API references, internal guides. Changes infrequently (per release cycle). Best approach: index locally, serve via search. Full-text search or vector embeddings work well here because the content is stable enough to pre-index. Read more about local-first documentation for a deep dive on this approach.

Live operational data — prices, inventory, system status, feature flags. Changes continuously (hours, minutes, or seconds). Best approach: query via API or database at request time with appropriate cache TTLs. RAG doesn’t work well here because by the time you’ve embedded and indexed the data, it’s already stale. A customer-facing agent quoting yesterday’s prices is worse than quoting no price at all.

Structured knowledge — facts, relationships, taxonomies, entity data. Best approach: knowledge graphs or semantic lookup tools that return structured JSON rather than document fragments. When the model needs “the current price of SKU-1234,” it needs a number, not a paragraph that might contain a number.

The distinction matters because mixing up approaches creates subtle failures. Embedding live pricing data into a vector database gives you yesterday’s prices with today’s confidence. Querying a documentation API in real-time adds latency and fragility where a local index would be instant and reliable.

The grounding architecture

When an AI agent receives a query, a grounded system follows a consistent pattern:

- The agent identifies what external data it needs. Is this a question about API usage (docs), current pricing (live data), or general knowledge (training data is fine)?

- The retrieval layer fetches relevant context. This could be a local doc search, an API call, a database query — or all three.

- Context is injected into the prompt alongside the user’s query. The model now has both the question and the facts needed to answer it.

- The model generates a response grounded in retrieved facts rather than training-time patterns.

- (Optional) A verification layer checks the output against the sources to catch remaining hallucinations.

This is sometimes called a “grounding pipeline” — and it’s the core architecture behind AI agents that don’t hallucinate. The specifics vary (what retrieval systems you use, how you compose the prompt, whether you add verification), but the pattern is consistent.

The key insight: grounding is an architectural concern, not a prompt engineering trick. You can’t reliably ground a model by telling it “only use facts.” You need infrastructure that provides those facts.

Notice that step 1 is the hardest. Knowing when to retrieve and what to retrieve requires understanding the query’s intent. A question about “how to configure authentication” needs docs. A question about “what’s the current subscription price” needs live data. A good grounding system handles this routing automatically — the agent doesn’t need to know the implementation details of each data source.

Grounding vs. fine-tuning

This is a common source of confusion. Fine-tuning and grounding solve different problems:

Fine-tuning changes the model’s behavior — its tone, reasoning style, domain vocabulary, output format. You’re adjusting how it thinks by training on task-specific examples. But the facts it knows still come from training data. Fine-tuning a model on medical terminology doesn’t keep it current on drug interactions.

Grounding changes the model’s information at query time. You’re giving it access to current facts without modifying the model itself. The model’s behavior stays the same, but its answers reflect real data instead of training patterns.

The decision framework is straightforward:

- Need factual accuracy about things that change? Use grounding. Current docs, live data, version-specific APIs — grounding handles these because it provides facts at inference time.

- Need the model to behave differently? Use fine-tuning. Domain-specific output formats, specialized reasoning patterns, company tone — fine-tuning handles these because they’re behavioral.

- Building a production system? You probably need both. Fine-tune for behavior, ground for facts.

Fine-tuning without grounding gives you a model that sounds like a domain expert but still hallucinates about current data. Grounding without fine-tuning gives you accurate facts delivered in a generic style. The combination is where production systems land.

Grounding in practice with MCP

The Model Context Protocol (MCP) makes grounding practical by standardizing how AI agents connect to external data sources. Instead of building custom integrations for every model and every data source, MCP defines a common interface: data sources expose “tools” through MCP servers, and AI agents query them through a standard protocol.

This matters for grounding because it means you can compose multiple grounding sources without custom integration code. A coding assistant can pull library docs from one MCP server and live API data from another — same protocol, same agent, different data. And because MCP is an open standard, you’re not locked into any particular vendor or model provider.

Here’s what a practical grounding setup looks like with MCP:

{

"mcpServers": {

"context": {

"command": "npx",

"args": ["-y", "@neuledge/context"]

}

}

}This configuration gives your AI agent access to local documentation through @neuledge/context — a tool that indexes library docs into local SQLite databases and serves them via MCP. The agent gets version-specific documentation with sub-10ms queries, no cloud dependency, and no rate limits.

For live data grounding, @neuledge/graph provides a semantic data layer that connects agents to operational data sources — pricing APIs, inventory systems, databases — through a single lookup() tool with pre-cached responses and structured JSON output.

The combination covers both grounding categories: static documentation via Context, live operational data via Graph. Both run locally, both expose tools through MCP, and both work with any AI agent that supports the protocol. Check the integrations page for setup guides with Claude Code, Cursor, Windsurf, and other editors.

Getting started

Start with the type of grounding that matches your biggest pain point:

- Your AI keeps using wrong API versions → Ground it with local documentation. Index the docs for the exact versions you use, serve them to your assistant via MCP.

- Your AI needs live data (prices, statuses, inventory) → Ground it with a data layer. Connect your operational APIs and let the agent query structured facts instead of guessing.

- Your AI hallucinates entirely → Read the hallucination prevention architecture guide for the full four-layer approach.

Grounding isn’t optional for production AI systems. Research shows that RAG-based grounding alone reduces hallucinations by 42–68%, and combining grounding with verification can push accuracy even higher. An ungrounded agent is a liability — it will confidently deliver wrong answers that look right. A grounded agent is a tool — it delivers answers based on your data, your docs, your reality.

Start grounding your LLM today:

- Install @neuledge/context for documentation grounding

- Install @neuledge/graph for live data grounding

- Read the docs and integration guides for setup with your editor

- Compare grounding tools to understand your options